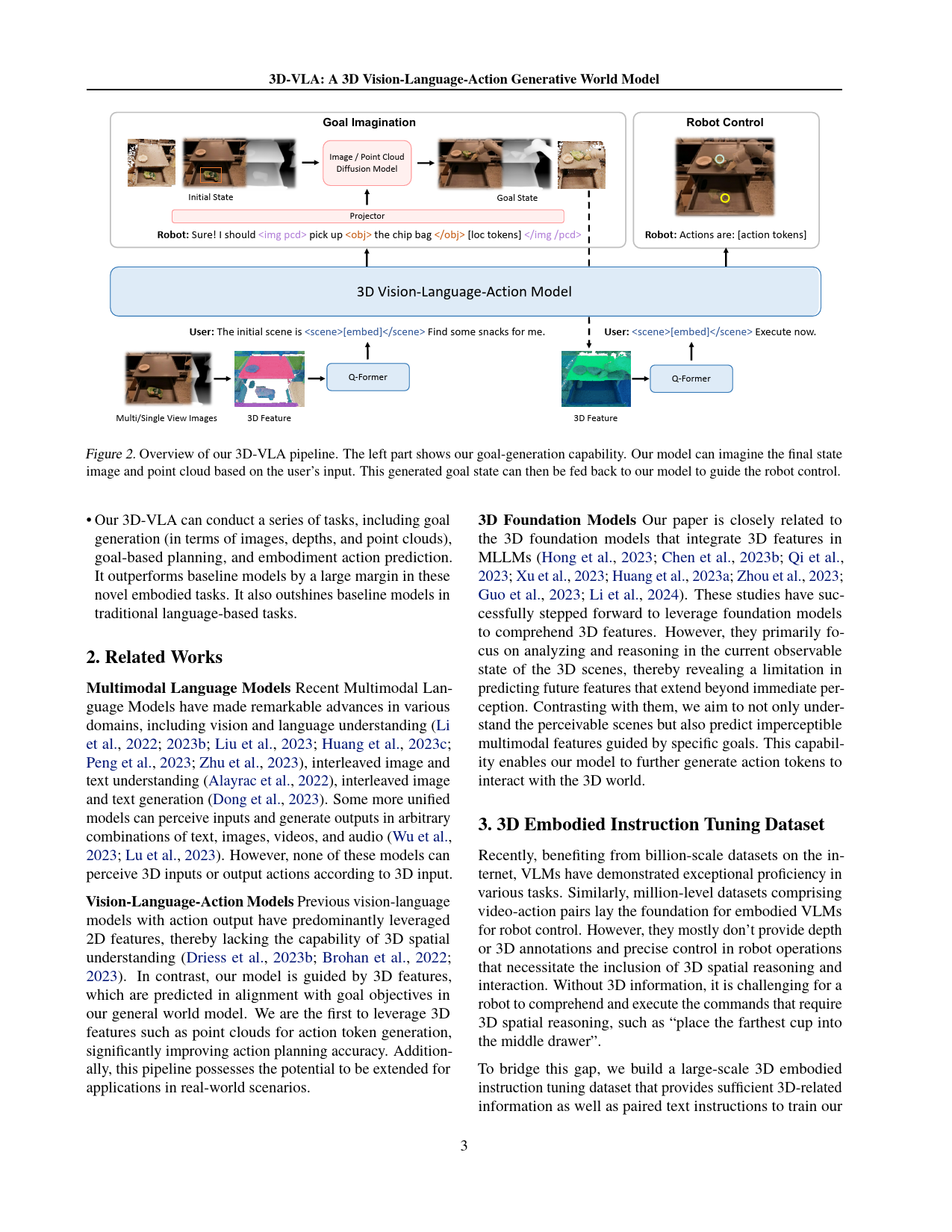

3D-VLA: A 3D Vision-Language-Action Generative World Model

Haoyu Zhen¹ ², Xiaowen Qiu¹, Peihao Chen³, Jincheng Yang², Xin Yan⁴, Yilun Du⁵, Yining Hong⁶, Chuang Gan¹ ⁷

https://vis-www.cs.umass.edu/3dvla

arXiv:2403.09631v1 [cs.CV] 14 Mar 2024

Abstract

Recent vision-language-action (VLA) models rely on 2D inputs, lacking integration with the broader realm of the 3D physical world. Furthermore, they perform action prediction by learning a direct [...]

[...] of the human brain. Such 2D foundation models also lay the foundation for recent embodied foundation models such as RT-2 (Brohan et al., 2023) and PALM-E (Driess et al., 2023a) that could generate high-level plans or low-level actions contingent on the images. However, they neglect the fact that human beings are situated within a far ric...
